The new breed of hostile bots (and how to defeat them)

Author: Tzury Bar Yochay, CTO and co-founder of Reblaze

When a cyberattack appears in the news, it’s usually one of two things:

- a massive DDoS, or…

- a high-profile data breach.

Thus, when people discuss cybersecurity, they tend to focus on these two types of threats.

This is a mistake. DDoS and breaches are not isolated problems. They are actually symptoms of a larger and more important problem: an inability to identify (and therefore, an inability to block) hostile automated traffic.

Sites and web applications must process a constant stream of incoming HTTP/S requests. Some of those requests come from human users, while the rest are generated by automated software “users” (in other words, bots).

Some cyberattacks consist entirely of automated traffic while others use bots in their early stages (for example, to perform vulnerability scans) before human attackers get directly involved.

Therefore, to maintain effective web security, an organization must be able to accurately identify the bots in its incoming traffic stream. This is arguably the most important, and the most challenging, task that a security solution must perform.

Overrun by bots

Today, most web traffic is automated. Reblaze’s analytics span hundreds of global deployments that process over three billion HTTP/S requests per day, and they show that, on average, only 38 percent of incoming requests come from humans.

The remaining 62 percent is automated traffic, not all of which is hostile: some bots, such as search engine spiders, are welcome on many sites. These benign bots account for 24 percent of overall traffic.

Thus, on average, hostile bots make up the remaining 38 percent (62 minus 24) of incoming traffic. Worse, this share has risen steadily over the last few years, with no sign of relenting.

Why it matters

Malicious bots can damage an organization in multiple ways, both directly (via revenue loss) and indirectly (via reputation loss). Here are some of the ways this can occur.

- Site Downtime: DDoS attacks consist of automated traffic. When they are successful, your sites and web applications are unavailable to legitimate users.

- Data Theft: Competitors can use bots to scrape prices and other sensitive data.

- Content Theft: Some business models depend on aggregated data that is costly to acquire and maintain. Bots can scrape this data and make it available to others.

- Site Breaches: Bots are used to run vulnerability scans and automated penetration probes. When one of them finds a weakness, a human attacker will soon arrive.

- Inventory Hoarding: Bots are used to “shop” on ecommerce sites, placing items into shopping carts without ever concluding the purchase. This can make the items unavailable to legitimate customers.

- Account Theft: Credential stuffing and other mass-login attacks are waged by bots.

- Card Testing: Bots are used to test stolen credit card numbers through fraudulent transactions. These cause direct losses through chargebacks, logistics costs, and unrecoverable shipped goods.

- Degraded Customer Experience: Heavy bot traffic consumes bandwidth and adds latency, which degrades the experience of your actual customers.

Evading traditional detection

Traditional bot-detection methods include signature detection, blacklisting, rate limiting, CAPTCHA/reCAPTCHA challenges, and JavaScript injection.

Modern bots can evade all of these. For example, they avoid signature detection by spoofing the identifying characteristics (such as the user-agent string) of legitimate browsers.

Blacklisting and rate limiting are evaded by rotating IP addresses.
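To see why IP rotation defeats rate limiting, consider a minimal sketch of a per-IP fixed-window limiter. The class name, limits, and addresses (taken from the ranges reserved for documentation) are hypothetical, not any product's configuration:

```python
import time
from typing import Dict, Optional, Tuple


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per `window` seconds from each client IP."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        # ip -> (start of current window, requests seen in that window)
        self._counters: Dict[str, Tuple[float, int]] = {}

    def allow(self, ip: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        start, count = self._counters.get(ip, (now, 0))
        if now - start >= self.window:
            start, count = now, 0  # the previous window has expired
        count += 1
        self._counters[ip] = (start, count)
        return count <= self.limit


limiter = FixedWindowRateLimiter(limit=10, window=60.0)

# One bot on one IP: everything after the 10th request in the window is refused.
single_ip = [limiter.allow("203.0.113.7", now=0.0) for _ in range(50)]

# The same bot rotating through 50 addresses: every request gets through.
rotating = [limiter.allow(f"198.51.100.{i}", now=0.0) for i in range(50)]

print(sum(single_ip), sum(rotating))  # 10 50
```

Because the counter is keyed on the source address, a bot that spreads the same 50 requests across 50 addresses never trips the limit.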

Simple CAPTCHA puzzles are straightforward for bots to solve, which is why reCAPTCHA was introduced to replace them. But even reCAPTCHA is becoming less useful: a few years ago, researchers at Columbia University published a method that solved it automatically over 70 percent of the time.

JavaScript injection detects only older bots: simple automated crawlers that cannot execute JavaScript.

Modern tools (for example, PhantomJS and CasperJS) execute JavaScript and behave much like actual browsers, so they pass this test.
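A JavaScript injection check can be sketched as follows, assuming a stateless design in which the server embeds a tiny arithmetic script in the page and signs the expected answer with an HMAC key. All names here are hypothetical, and production challenges are typically far more elaborate:

```python
import hashlib
import hmac
import re
import secrets
from typing import Optional, Tuple

# Hypothetical per-deployment secret; never sent to clients.
SECRET_KEY = secrets.token_bytes(32)


def _sign(value: str) -> str:
    return hmac.new(SECRET_KEY, value.encode(), hashlib.sha256).hexdigest()


def issue_challenge() -> Tuple[str, str]:
    """Return (script to inject into the page, signed token to set as a cookie)."""
    a = secrets.randbelow(1000) + 1
    b = secrets.randbelow(1000) + 1
    # The browser must evaluate this expression to produce the answer cookie.
    script = f'document.cookie = "js_answer=" + ({a} * {b});'
    return script, _sign(str(a * b))


def verify(answer: Optional[str], token: str) -> bool:
    """Check the answer cookie returned on the next request against the token."""
    if answer is None:
        return False  # the client never executed the injected script
    return hmac.compare_digest(_sign(answer), token)


script, token = issue_challenge()
print(verify(None, token))  # False: a plain HTTP client ignores the script

# Simulate a headless browser that executes the script and returns the product.
a, b = map(int, re.findall(r"\d+", script))
print(verify(str(a * b), token))  # True
```

A client that never executes the script sends no answer and fails, but a headless browser runs the script like any other page script and passes, which is exactly the weakness described above. The sketch also ignores replay: a solved answer/token pair could be reused unless the server tracks or expires tokens.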

Increasing sophistication

Bot developers do not focus merely on evading existing detection methods. They are continuously pushing forward the ‘state of the art’ for automated malware.

Roughly three-quarters of all malicious bots are now Advanced Persistent Bots (APBs). They cycle through random IP addresses, enter networks through anonymous proxies, change their identities frequently, and do a credible job of mimicking human behavior.

Increasing volume

In addition to their increasing sophistication, modern bots have also become far more numerous.

Threat actors have built enormous botnets, the largest of which contain millions of devices, and are constantly trying to expand them.

They are also quite creative in turning other resources into attack tools. For example, cellular gateways are now being abused to continually provide new IP addresses for massed bot attacks. Cellular networks maintain large pools of IPs in order to handle their enormous volume of daily requests, and any given address can be used and re-used thousands of times per day.

Also, these networks serve mobile devices, which frequently change location and move between networks.

All this is ideal for concealing malicious bot activity. Gateway IPs are almost always assigned to legitimate devices, so blacklisting them for bot activity is not an option. Nor can a security solution block a specific requestor based on frequent changes of IP or geolocation, because users on mobile devices will often do this.

These and other factors make bot detection extremely challenging today.

How to identify the new breed of hostile bots

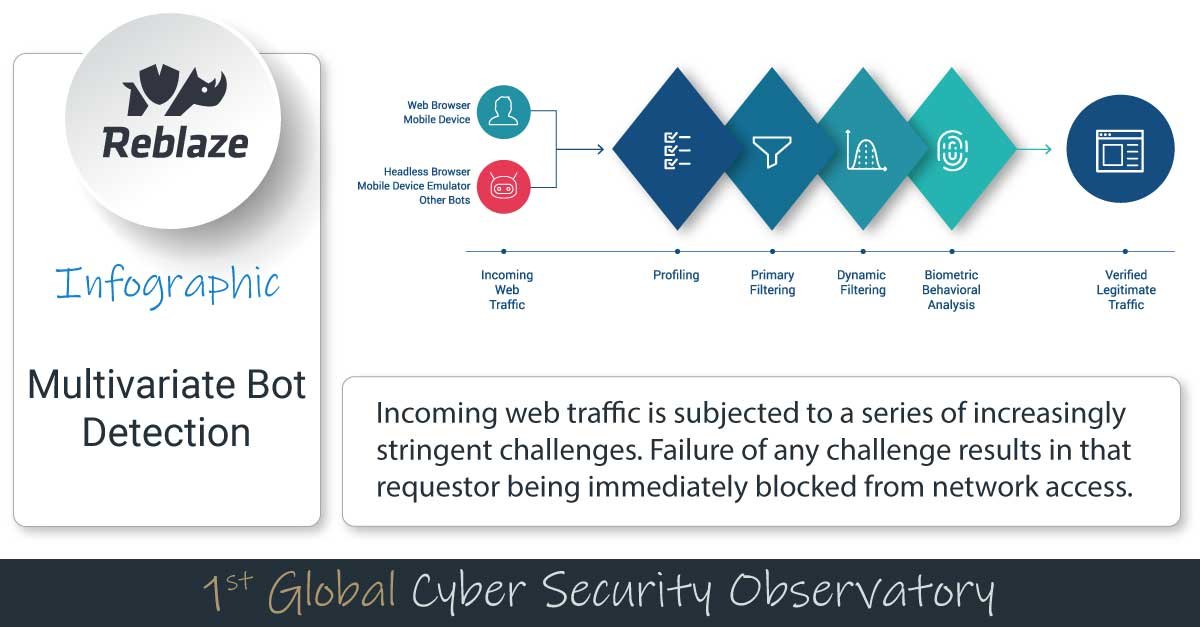

To accurately detect modern bots, a multi-dimensional approach is needed. Different identification methods will catch different types of bots. Therefore, achieving a secure environment requires using them all. Doing this at scale with near-zero latency requires an architecture designed for that purpose.

For discussion purposes, I’ll use the Reblaze platform as an example of this. At Reblaze, incoming traffic is subjected to an increasingly strict series of challenges. The initial stages detect and exclude large groups of bots with minimal processing. Stages which require more intensive scrutiny then follow, once the remaining request volume is much smaller.
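The staged design can be sketched as an ordered list of filter functions, cheapest first, where a request stops at the first stage that blocks it. This is a hypothetical illustration of the general idea, not Reblaze's code; the stage functions and the `via_anonymizer` flag are assumptions:

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class Verdict(Enum):
    BLOCK = "block"
    PASS = "pass"  # no objection; hand the request to the next stage


@dataclass
class Request:
    ip: str
    via_anonymizer: bool  # assumed to be set by an upstream proxy/Tor lookup


Stage = Callable[[Request], Verdict]


def run_pipeline(request: Request, stages: List[Stage]) -> bool:
    """Return True if the request clears every stage, evaluated in order."""
    for stage in stages:
        if stage(request) is Verdict.BLOCK:
            return False  # later, costlier stages never see this request
    return True


def acl_stage(req: Request) -> Verdict:
    # Cheap: a check on metadata already attached to the request.
    return Verdict.BLOCK if req.via_anonymizer else Verdict.PASS


stats = {"behavioral_calls": 0}


def behavioral_stage(req: Request) -> Verdict:
    # Stand-in for a costly check; here it only records that it ran.
    stats["behavioral_calls"] += 1
    return Verdict.PASS


incoming = [Request(f"192.0.2.{i}", via_anonymizer=(i % 3 != 0)) for i in range(90)]
allowed = [r for r in incoming if run_pipeline(r, [acl_stage, behavioral_stage])]
print(len(allowed), stats["behavioral_calls"])  # 30 30
```

In this toy run, two of every three requests are rejected by the cheap first stage, so the costly stage runs only for the remaining third.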

For example, the first filtering stage uses precise ACLs (Access Control Lists) to identify and block requests which meet certain criteria. These criteria include geolocation, network of origin, anonymizer or proxy usage, and more—metrics which are instantly available for each request.

Out of the box, Reblaze includes a set of ACLs which catches roughly 75 percent of global bot traffic. This allows the platform to instantly exclude three-quarters of hostile bots with no processing workload.
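ACL filtering of this kind can be approximated by rules evaluated against per-request metadata. The sketch below assumes that country, ASN, and an anonymizer flag have already been attached to each request by upstream lookups (GeoIP and proxy/Tor feeds, not shown); the rule names, ASNs (from the range reserved for documentation), and networks are hypothetical:

```python
import ipaddress
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class RequestMeta:
    ip: str
    country: str         # ISO 3166-1 alpha-2 code from a GeoIP lookup (assumed)
    asn: int             # autonomous system number of the originating network
    is_anonymizer: bool  # True for known proxies or Tor exits (assumed feed)


@dataclass(frozen=True)
class AclRule:
    name: str
    countries: FrozenSet[str] = frozenset()
    asns: FrozenSet[int] = frozenset()
    networks: Tuple[ipaddress.IPv4Network, ...] = ()
    block_anonymizers: bool = False

    def matches(self, meta: RequestMeta) -> bool:
        addr = ipaddress.ip_address(meta.ip)
        return (
            meta.country in self.countries
            or meta.asn in self.asns
            or any(addr in net for net in self.networks)
            or (self.block_anonymizers and meta.is_anonymizer)
        )


def first_matching_rule(meta: RequestMeta, rules: List[AclRule]) -> Optional[str]:
    """Return the name of the first rule that blocks this request, or None."""
    for rule in rules:
        if rule.matches(meta):
            return rule.name
    return None


rules = [
    AclRule("deny-anonymizers", block_anonymizers=True),
    AclRule(
        "deny-abusive-hosting",
        asns=frozenset({64500}),
        networks=(ipaddress.ip_network("203.0.113.0/24"),),
    ),
]

print(first_matching_rule(RequestMeta("198.51.100.5", "US", 64501, False), rules))  # None
print(first_matching_rule(RequestMeta("203.0.113.9", "DE", 64502, False), rules))  # deny-abusive-hosting
print(first_matching_rule(RequestMeta("192.0.2.44", "FR", 64503, True), rules))  # deny-anonymizers
```

Each check is a set lookup or a prefix comparison on data that is already present, which is why this stage can discard traffic with very little work.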

Filtering continues with more advanced challenges, including request validation and advanced browser environment identification. Next is Primary Filtering, which includes blacklisting, rate limiting, signature detection, payload data integrity verification, and positive-security enforcement.

(You’ve probably noticed that some of these are the traditional methods discussed earlier. Although they are not sufficient by themselves to detect all bot traffic, they are still useful in this multi-dimensional context: they allow large tranches of older bots to be quickly eliminated with minimal processing workload.)
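Positive-security enforcement, one of the Primary Filtering checks listed above, inverts the usual blacklist: only requests that match a known-good model are allowed. A minimal sketch, assuming a hand-written per-endpoint allowlist of parameter names and value formats (the endpoints and patterns are hypothetical):

```python
import re
from typing import Dict, Mapping

# Hypothetical positive model: the only parameters each endpoint accepts,
# and the exact format each value must have.
POSITIVE_MODEL: Dict[str, Dict[str, re.Pattern]] = {
    "/search": {
        "q": re.compile(r"[\w\s-]{1,64}"),
        "page": re.compile(r"\d{1,3}"),
    },
    "/login": {
        "user": re.compile(r"[\w.@+-]{3,64}"),
        "password": re.compile(r".{8,128}"),
    },
}


def conforms(path: str, params: Mapping[str, str]) -> bool:
    """Allow a request only if its endpoint and every parameter are known-good."""
    model = POSITIVE_MODEL.get(path)
    if model is None:
        return False  # unknown endpoint: deny by default
    for name, value in params.items():
        pattern = model.get(name)
        if pattern is None or pattern.fullmatch(value) is None:
            return False  # unexpected parameter or malformed value
    return True


print(conforms("/search", {"q": "red shoes", "page": "2"}))  # True
print(conforms("/search", {"q": "' OR 1=1 --"}))  # False
print(conforms("/search", {"q": "shoes", "debug": "1"}))  # False
print(conforms("/admin", {}))  # False
```

Because unknown endpoints and unexpected parameters are denied by default, a scanner probing for injectable inputs is stopped even when its payload matches no known attack signature.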

Requests that pass the first two stages are subjected to Dynamic Filtering. Usage of various network resources (data, URLs, and so on) is tracked over time. Requestors that display anomalous consumption patterns (in terms of quantity, pace, rhythm, types, methods, etc.) are blocked.
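As a simplified sketch of this kind of tracking, assume each requestor is identified by a session or device key (not an IP, since IPs rotate) and that two timing signals suffice for illustration: pace (average request rate) and rhythm (how regular the gaps between requests are). The class name and thresholds are hypothetical; the article does not say how Reblaze sets them:

```python
import statistics
from collections import defaultdict, deque
from typing import Deque, Dict


class ConsumptionTracker:
    """Flag requestors whose recent request timing looks machine-driven."""

    def __init__(self, window: int = 20, max_rate: float = 5.0, min_cv: float = 0.1) -> None:
        self.window = window      # recent requests kept per requestor
        self.max_rate = max_rate  # requests/second treated as too fast
        self.min_cv = min_cv      # gap variation below this looks clock-driven
        self._times: Dict[str, Deque[float]] = defaultdict(lambda: deque(maxlen=window))

    def observe(self, requestor: str, timestamp: float) -> bool:
        """Record one request; return True if the requestor now looks anomalous."""
        times = self._times[requestor]
        times.append(timestamp)
        if len(times) < self.window:
            return False  # not enough history to judge yet
        ts = list(times)
        gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
        mean_gap = statistics.mean(gaps)
        if mean_gap <= 0:
            return True  # a burst of simultaneous requests
        rate = 1.0 / mean_gap
        cv = statistics.pstdev(gaps) / mean_gap  # coefficient of variation (rhythm)
        return rate > self.max_rate or cv < self.min_cv


tracker = ConsumptionTracker()

# A scraper firing exactly once per second: modest pace, perfectly regular rhythm.
bot_flags = [tracker.observe("session-bot", float(t)) for t in range(20)]

# An irregular, human-like sequence of page views.
human_gaps = [0.5, 3.0, 1.2, 7.5, 2.0] * 4
t, human_flags = 0.0, []
for gap in human_gaps:
    human_flags.append(tracker.observe("session-human", t))
    t += gap

print(bot_flags[-1], human_flags[-1])  # True False
```

The metronomic client is flagged on rhythm alone even though its pace is modest, while the irregular sequence is not.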

The fourth and final filtering stage is Biometric Behavioral Analysis. For each application that Reblaze protects, the platform uses Machine Learning to build a comprehensive behavioral profile of legitimate users. It learns how authentic users interact with each app: their device and browser statistics, the typical analytics and metrics of each session, the interface events they generate (mouse clicks, screen taps, scrolls, zooms, etc.), and much more. As a result, Reblaze understands how actual humans interact with the web apps it is protecting, and it continually verifies that incoming requests are consistent with these patterns. Deviations from them indicate a hostile requestor, which is then blocked.

Note that the most computationally intensive part of this process (using Machine Learning to construct behavioral profiles) does not need to be done inline during traffic filtering; it is a long-term analysis of collective user interactions with an application. Therefore, it is done in a separate process, and only the actual verification of a requestor’s behavior is performed inline.
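The split between offline profiling and inline verification can be illustrated with a deliberately simple stand-in: instead of a machine-learned model, the sketch below stores the mean and standard deviation of a few behavioral features computed over known-human sessions, then checks each new session with a per-feature z-score test. The feature names, sample values, and threshold of 3 are hypothetical:

```python
import statistics
from dataclasses import dataclass
from typing import Dict, List, Mapping


@dataclass(frozen=True)
class FeatureStats:
    mean: float
    stdev: float


def build_profile(sessions: List[Mapping[str, float]]) -> Dict[str, FeatureStats]:
    """Offline, periodic step: summarize each feature over known-human sessions."""
    profile = {}
    for name in sessions[0]:
        values = [s[name] for s in sessions]
        # Guard against zero spread so the inline check never divides by zero.
        profile[name] = FeatureStats(statistics.mean(values), statistics.stdev(values) or 1e-9)
    return profile


def is_consistent(session: Mapping[str, float], profile: Mapping[str, FeatureStats],
                  max_z: float = 3.0) -> bool:
    """Inline step: cheap per-feature z-score test against the stored profile."""
    return all(
        abs(session[name] - stats.mean) / stats.stdev <= max_z
        for name, stats in profile.items()
    )


human_sessions = [
    {"pointer_events_per_min": 40, "scrolls_per_page": 6, "secs_per_page": 35},
    {"pointer_events_per_min": 55, "scrolls_per_page": 9, "secs_per_page": 50},
    {"pointer_events_per_min": 30, "scrolls_per_page": 4, "secs_per_page": 28},
    {"pointer_events_per_min": 48, "scrolls_per_page": 7, "secs_per_page": 41},
]
profile = build_profile(human_sessions)

bot_session = {"pointer_events_per_min": 0, "scrolls_per_page": 0, "secs_per_page": 1.5}
human_like = {"pointer_events_per_min": 45, "scrolls_per_page": 6, "secs_per_page": 38}
print(is_consistent(bot_session, profile), is_consistent(human_like, profile))  # False True
```

`build_profile` is the expensive, periodic step; `is_consistent` costs a handful of arithmetic operations per request, which is why this kind of check can run inline.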

These sorts of optimizations, when applied to the four stages above, allow the correct identification of malicious bots with negligible latency (averaging about one-half of a millisecond). Therefore, this multi-dimensional process can be applied to all incoming traffic instantaneously, without affecting the responsiveness of the protected web applications.

Conclusion

Accurate bot detection is one of the most challenging, and most important, aspects of web security today.

This article used a specific security platform (Reblaze) as an example of a successful approach to solving this problem. The purpose of this article is not to claim that Reblaze’s specific approach is the only correct one.

Instead, it is to show the technological depth that is required for a platform which can successfully detect and defeat hostile bots today. Executives should consider this when evaluating web security solutions for their organizations.

About Tzury Bar Yochay

Tzury Bar Yochay is the CTO and co-founder of Reblaze. Having served in technical leadership in several software companies, Tzury founded Reblaze to pioneer an innovative new approach to cyber security. Tzury has more than 20 years of experience in the software industry, holding R&D and senior technical roles in various companies. Prior to founding Reblaze, he also founded Regulus Labs, a network software company. As a thought leader in security technologies, Tzury is frequently invited to present at industry conferences around the globe.
